Building cloud infrastructure manually is often slow, error-prone, and difficult to scale.

In one of my recent projects, I encountered a repetitive operational challenge: creating EC2 instances manually, configuring JupyterHub on each server, wiring correct IAM roles, and provisioning dedicated S3 buckets per client.

Each setup took several hours due to coordination across multiple AWS services.

This case study explains how I fully automated the workflow, reducing the provisioning time to under 3 minutes, while also improving reliability and consistency.

The Initial Problem

The organization onboarded new client into their learning platform.

For each client, the DevOps team manually performed:

- Create an S3 bucket for uploads

- Create an IAM role for the EC2 instance

- Attach a policy granting access to that bucket

- Launch a new EC2 instance

- Install & configure JupyterHub on it

- Tag and validate infrastructure

- Share instance details back to platform admin

What should have been routine provisioning instead became:

- Slow (1–2 hours each)

- Manual + repetitive

- Prone to misconfiguration

- Dependent on a senior engineer’s time

This was clearly a candidate for automation.

Engineering Goals

I defined these automation goals:

- Zero-touch provisioning for each new client

- Reusable orchestration service that ties AWS SDK modules together

- Consistent IAM security across all deployments

- Event-driven workflow instead of human-triggered steps

- Complete setup under minutes

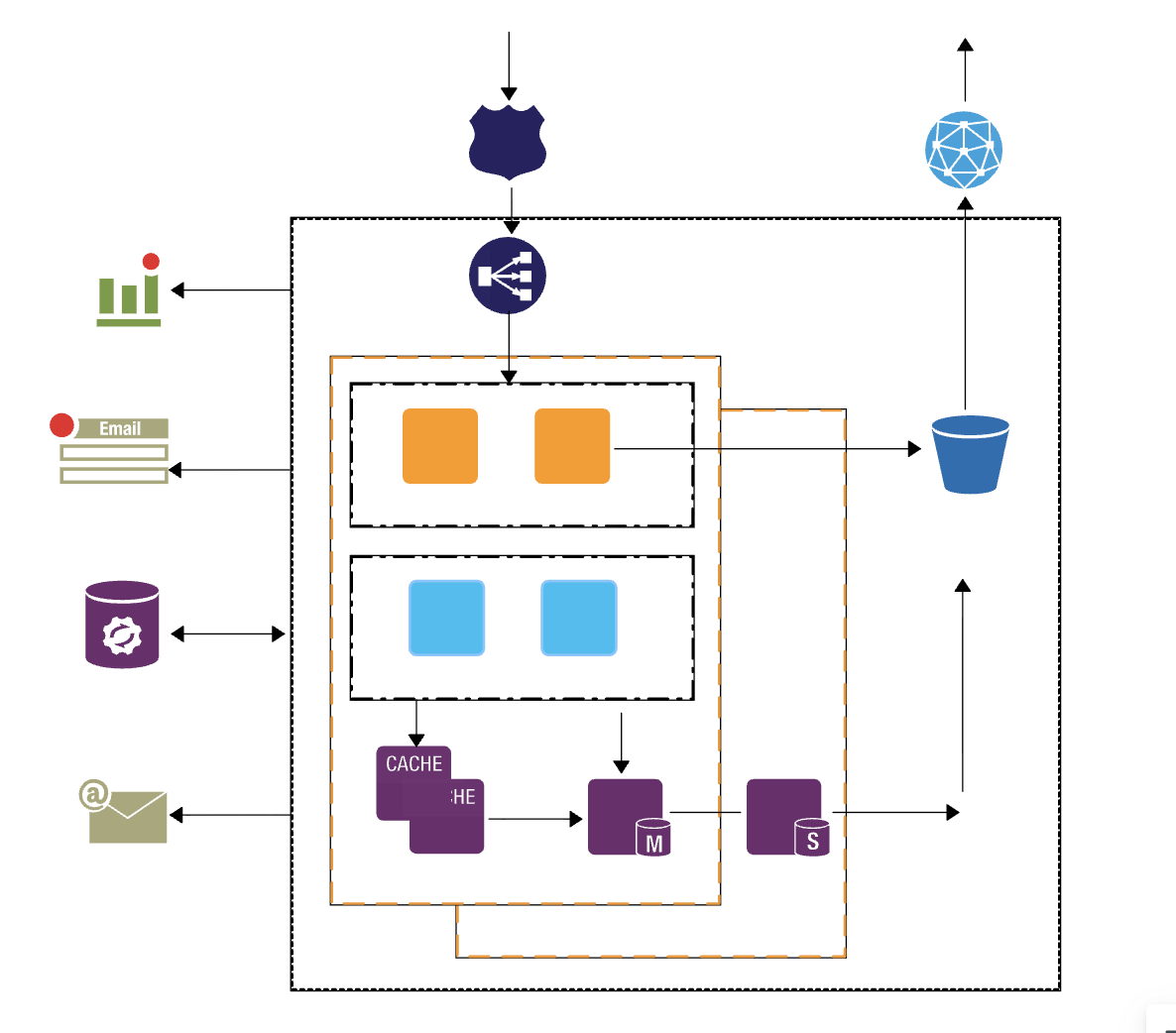

Architecture Overview (Automated Flow)

Here’s the final automated architecture explained in steps:

-

API Request Received –

Client calls/addNewEC2with a client name. -

S3 Bucket Created –

A bucket is generated for file uploads. -

IAM Role Generated –

A role likerole-Ais created dynamically. -

IAM Policy Attached –

Auto-generated policy grants access to that specific bucket. -

EC2 Instance Provisioned –

An instance deploys with a JupyterHub bootstrap script. -

UserData Handles Server Setup –

- Installs AWS CLI

- Installs JupyterHub (TLJH)

- Sets admin user

- Prepares environment

-

Client Receives Success Response

Every step is orchestrated by a single backend service call.

Why This Automation Was Necessary

From an architectural standpoint:

- Manual provisioning doesn't scale with onboarding velocity.

- Human-dependent steps increase blast radius for mistakes.

- Security policies must be deterministic, not remembered.

- Engineering time must be spent on value, not repetition.

- Infrastructure that can be automated should be automated.

By implementing this pipeline, I transformed an operational workflow into a repeatable, auditable, and reliable service.

Implementation (Full Automated AWS Orchestration)

The following generic TypeScript service uses AWS SDK v2 to automate bucket creation, role creation, policy linking, and EC2 provisioning.

interface ProvisionEC2Params {

instanceName: string;

imageId: string; // AMI ID

instanceType: string; // EC2 type

keyName: string; // SSH Key

userDataScript?: string; // Optional bootstrap script

}

async function provisionEC2Instance(params: ProvisionEC2Params, res) {

const { instanceName, imageId, instanceType, keyName, userDataScript } = params;

// 1. Create S3 Bucket

s3.createBucket(

{ Bucket: instanceName },

async (error, success) => {

if (error) {

console.log("Error creating bucket:", error);

return;

}

}

);

// 2. Create IAM Role

const roleCreated = await createRole(instanceName);

// 3. Attach IAM Policy

const policyUpdate = await putRolePolicy(

`role-${instanceName}`,

`policy-${instanceName}`,

instanceName,

roleCreated

);

if (policyUpdate["$metadata"]["httpStatusCode"] === 200) {

const roleResponse = await iam.getRole({ RoleName: `role-${instanceName}` }).promise();

// 4. Delay to ensure eventual consistency

setTimeout(async () => {

const instanceParams = {

ImageId: imageId,

InstanceType: instanceType,

KeyName: keyName,

MinCount: 1,

MaxCount: 1,

IamInstanceProfile: { Name: roleResponse.Role.RoleName },

TagSpecifications: [

{

ResourceType: "instance",

Tags: [{ Key: "Name", Value: instanceName }],

},

],

UserData: Buffer.from(

userDataScript || "#!/bin/bash\n# Add your custom bootstrap commands here",

"utf-8"

).toString("base64"),

};

// 5. Launch EC2

const instancePromise = ec2.runInstances(instanceParams).promise();

const data = await instancePromise

.then((data: any) => data)

.catch((err) => {

console.log("Error launching EC2:", err);

});

if (!data) {

res.status(400).json({ status: "error" });

return;

}

res.status(200).json({ status: "success" });

}, 5000);

}

}Conclusion

Automating this provisioning workflow transformed what was previously a long, repetitive, and error-prone process into a predictable and efficient system. By integrating S3 provisioning, IAM role generation, policy assignment, EC2 orchestration, and JupyterHub installation into a single automated flow, the organization gained both speed and consistency.

The new pipeline brings measurable benefits: faster onboarding, reduced manual involvement, improved security through deterministic IAM policies, and a scalable approach that supports future growth without additional operational burden. What once took hours now completes in a matter of minutes, allowing teams to focus on product improvements instead of routine setup tasks.

This automation not only solves an immediate operational bottleneck but also establishes a foundational pattern for future infrastructure workflows that need to be reliable, repeatable, and easy to maintain.

Read more

Automating EC2 & JupyterHub Provisioning: Reducing Deployment Time from Hours to Minutes

A senior-engineer case study on converting a manual AWS provisioning workflow into a fully automated system using AWS SDK, IAM, S3, and EC2 orchestration.

Architecting a Scalable Learning Management Platform with React, Node.js, and AWS

A case study on migrating a monolithic LMS to a modular, microservices-inspired architecture with high scalability, real-time analytics, and multi-role support.

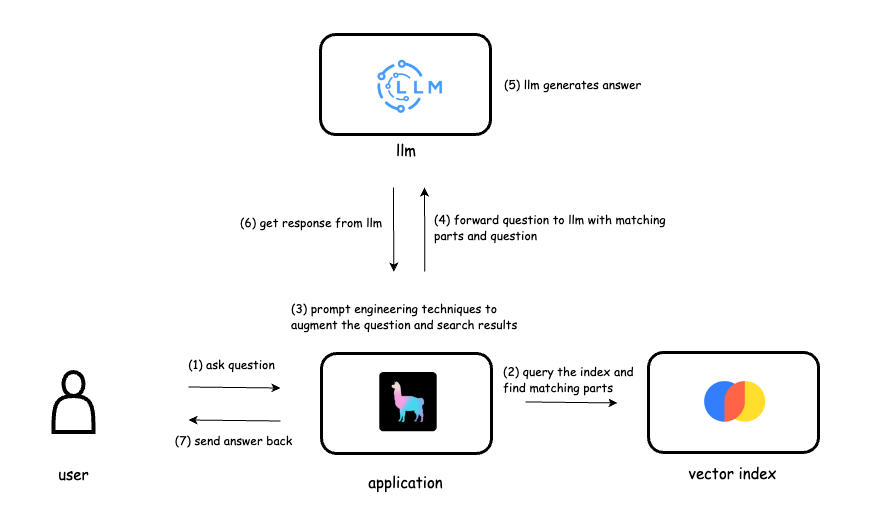

Building a RAG Application for AI-Powered File Interaction

A case study on developing a Retrieval-Augmented Generation (RAG) system that allows users to interact with AI trained on uploaded documents.